by James A. Bacon

John Butcher, writing on Cranky’s Blog, has been on a tear lately as he plows through the reams of “Student Growth Percentile” (SGP) data that the Virginia Department of Education (VDOE) released under prodding from the federal government.

The problems with using raw Standards of Learning (SOL) data to rate teachers, principals, schools and school divisions are well known. Roughly 60% of the variability in SOL scores between schools reflects the socioeconomic status of the student body. It is patently unreasonable to compare the educational efficacy of a school teaching poor, inner-city kids with that of a school teaching affluent suburbanites on SOL scores alone. The SGP gets around that problem by measuring each student's improvement in scores over time, relative to students who started from similar scores. Improvement reflects the quality of teaching and administration, not socioeconomic status.
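
To make the idea concrete, here is a deliberately simplified Python sketch of a growth percentile. It is a stand-in under stated assumptions, not VDOE's method: SGP models as commonly implemented fit quantile regressions on students' prior scores, whereas this sketch simply groups students with an identical prior-year score and ranks each student's current-year score within that group. The function name `simple_growth_percentiles` and the `(student_id, prior_score, current_score)` data layout are hypothetical.

```python
from collections import defaultdict


def simple_growth_percentiles(records):
    """Hypothetical, simplified growth percentile (not the real SGP model).

    records: list of (student_id, prior_score, current_score) tuples.
    Returns {student_id: percentile from 1 to 99}. A student's "academic
    peers" are assumed to be everyone with exactly the same prior score;
    real SGP models use quantile regression on prior scores instead.
    """
    # Collect current-year scores for each peer group (same prior score).
    peers = defaultdict(list)
    for _, prior, current in records:
        peers[prior].append(current)

    result = {}
    for student, prior, current in records:
        group = peers[prior]
        below = sum(1 for s in group if s < current)
        # Tied scores (including the student's own) count half: a mid-rank.
        ties = sum(1 for s in group if s == current)
        pct = 100 * (below + 0.5 * ties) / len(group)
        # Clamp to the 1-99 range on which growth percentiles are reported.
        result[student] = min(99, max(1, round(pct)))
    return result
```

A student who is alone in a peer group lands at the 50th percentile, because the measure depends only on how students fare against others who started in the same place, not on where they started.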

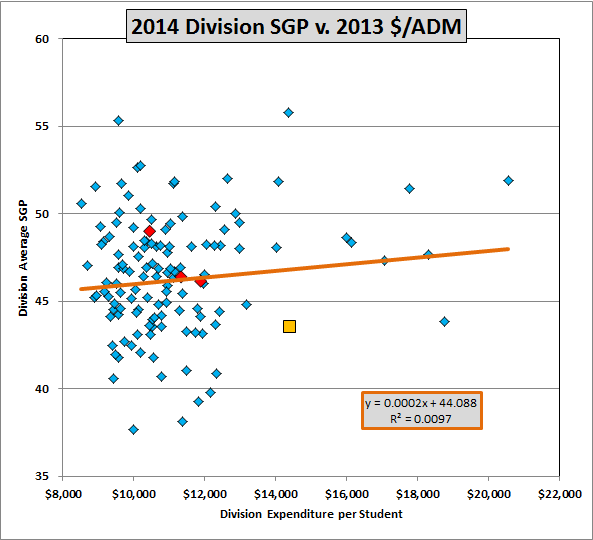

In one recent post, Cranky… er, I mean John… demonstrated that there is almost no correlation between SGP and how much money a division spends. The coefficient of determination (R²) between divisional expenditure per student and average SGP is less than 1%, meaning that less than 1% of the variability in SGP between school divisions can be explained by how much money they spend.
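
The share of variability explained is R², which for a simple one-variable linear fit equals the square of Pearson's correlation coefficient r. So an R² below 1% corresponds to a correlation weaker than 0.1 in either direction. The Python sketch below computes both numbers from two paired lists; the function name `pearson_r_and_r2` is hypothetical, and nothing here uses John's actual data.

```python
import math


def pearson_r_and_r2(x, y):
    """Return (r, r_squared) for paired samples x and y.

    r is Pearson's correlation coefficient; r_squared is the share of the
    variance in y explained by a one-predictor least-squares line.
    """
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two equal-length samples of size >= 2")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sums of cross-products and squared deviations from the means.
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when a sample is constant")
    r = cov / math.sqrt(var_x * var_y)
    # For a single predictor, variance explained is simply r squared.
    return r, r * r
```

Because R² squares r, a perfect negative relationship and a perfect positive one both give R² = 1; R² reports how much variability is explained, not in which direction.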

For details, read the full post here.

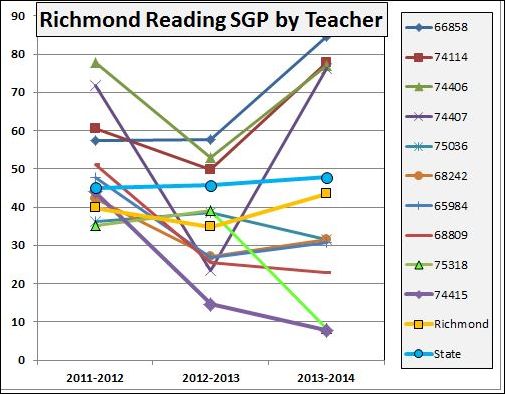

John then took VDOE to task for suppressing the identity of individual teachers. The data set anonymizes them, identifying each teacher only by a five-digit number. Delving into the City of Richmond data, John shows how widely teachers vary in their ability to teach.

The chart above shows a representative sample of teacher SGPs. John homes in on Teacher 74415, the purple line:

> The principal who allowed 25 kids (we have SGPs for 24 of the 25) to be subjected to this educational malpractice in 2014 should have been fired. Yet VDOE deliberately makes it impossible for Richmond’s parents to know whether this situation has been corrected or whether, as is almost certain, another batch of kids is being similarly afflicted with this awful teacher.

Read the full post here.

Lastly, John makes an intriguing suggestion: use the SGP data to rate the quality of the teachers produced by Virginia’s schools of education.

> Just think, VDOE now can measure how well each college’s graduates perform as fledgling teachers and how quickly they improve (or not) in the job. In this time of increasing college costs, those data would be important for anyone considering a career in education. And the data should help our school divisions make hiring decisions.
>
> In addition, VDOE could assess the effectiveness of the teacher training at VCU, which is spending $90,000 a year of your and my tax money to hire Richmond’s failed Superintendent as an Associate Professor in “Educational Leadership.” Wouldn’t it be interesting to see whether that kind of “leadership” can produce capable teachers (albeit it produced an educational disaster in Richmond).

Go, Cranky, go!